This is Part 3 of a three-part series on Jenkins, a popular automation tool that can unlock the power of CD/CI and DevOps workflows for small and medium businesses as well as large enterprises. In Part 1, we looked at different approaches and options for installation and initial setup. In Part 2, we looked at what’s included with Jenkins and briefly explained its configuration options, ending with a rudimentary build pipeline. That pipeline is the starting point for Part 3, where we’ll look at some of the more advanced features and a small sample of the many options Jenkins can bring to your organization.

Setting Up Master/Slave Jenkins Nodes

Jenkins supports a master/slave topology. Beyond providing additional computing power, slave nodes can be configured to execute builds in multiple environments, for instance on Unix and Windows simultaneously, or to test against multiple versions of Windows as part of a testing methodology. The possibilities are many.

To set up a *nix instance as a Jenkins slave, select ‘Manage Jenkins’ -> ‘Manage Nodes’. Select ‘New Node’ and supply a name.

Select ‘Permanent Agent’, which is appropriate for adding a physical host to a Jenkins topology. On the next page, set the number of executors (one or greater), a remote root directory (for instance, ‘/opt/jenkins’), the node’s Usage, its Launch method, and its Availability.
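On the agent side, the remote root directory must exist and be writable by the account Jenkins uses, and because the agent is itself a Java process, the host also needs a Java runtime. The following is a minimal sketch of those checks, assuming the agent runs as the current user; ‘/opt/jenkins’ is only the example path from above, and ‘prepare_agent_root’ and ‘check_agent_java’ are hypothetical helper names, not part of Jenkins.

```shell
#!/usr/bin/env bash
# Sketch: prepare a Linux host to act as a permanent Jenkins agent.
# Assumption: the agent process runs as the current user. The function
# names are hypothetical helpers, not part of Jenkins.

# Create the agent's remote root directory (e.g. /opt/jenkins) and confirm
# that the current user can write to it. Prints the directory on success.
prepare_agent_root() {
  local root_dir="$1"
  if ! mkdir -p "$root_dir"; then
    echo "cannot create $root_dir" >&2
    return 1
  fi
  if [ ! -w "$root_dir" ]; then
    echo "not writable by $(id -un): $root_dir" >&2
    return 1
  fi
  printf '%s\n' "$root_dir"
}

# The agent is a Java program, so a Java runtime must be on the PATH.
# Prints the first line of the version banner if one is found.
check_agent_java() {
  if command -v java >/dev/null 2>&1; then
    java -version 2>&1 | head -n 1
  else
    echo "java not found on PATH" >&2
    return 1
  fi
}
```

Run it as the account Jenkins will connect with, for example ‘prepare_agent_root /opt/jenkins && check_agent_java’. Creating a directory under /opt usually requires root, so it may need to be created and handed to that account first.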

To configure a Windows host as a Jenkins slave, follow the same steps in the Jenkins master UI, but using a browser on the slave machine. While provisioning the new node (this uses the ‘dumb slave’ approach), select ‘Launch Slave Agent via JNLP’ as the launch method.

Once completed and saved, the ‘Manage Nodes’ menu should show the entry that was just configured. Select it, and the options for connecting the Jenkins slave are presented: launch from the browser, run from the slave command line, or a modified command-line option if the slave is headless. In this case, click ‘Launch from browser on slave’. This should prompt you to save a file called ‘slave-agent.jnlp’ to the local machine; save it in the root directory configured for the slave (something like D:\JenkinsSlaves).

Once downloaded, execute ‘javaws http:///slave-agent.jnlp’ from the command line. This should open a new window with a prompt to install the slave agent as a Windows service. Choose ‘OK’. Once complete, the Jenkins master should show the Windows slave as connected via JNLP, indicating that the server is ready to accept build jobs.
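The JNLP file is served by the master from the node’s own page. In Jenkins versions of this era the path took the form <master>/computer/<node name>/slave-agent.jnlp, although later releases may use a different file name. The sketch below assembles that URL and hands it to javaws; the master address and node name are placeholders, and node names containing spaces or other special characters would need URL-encoding, which this sketch does not do.

```shell
#!/usr/bin/env bash
# Sketch: build the JNLP URL for a node and launch it with Java Web Start.
# Assumptions: the master URL and node name are placeholders, and the
# /computer/<node>/slave-agent.jnlp path matches the Jenkins version in use.

# jnlp_url MASTER_URL NODE_NAME -> prints the agent JNLP URL
jnlp_url() {
  local master="${1%/}"   # drop a trailing slash so we never emit "//"
  local node="$2"
  printf '%s/computer/%s/slave-agent.jnlp\n' "$master" "$node"
}

# launch_jnlp_agent MASTER_URL NODE_NAME -> opens the agent via javaws
launch_jnlp_agent() {
  javaws "$(jnlp_url "$1" "$2")"
}
```

For example, ‘launch_jnlp_agent http://jenkins.example.com:8080 ExampleNode’, where both arguments stand in for your own master and node.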

Assuming our Jenkins topology now contains at least one valid slave node, executing a build on a slave machine is a matter of configuring the ‘Restrict where this project can be run’ option. The name of the slave machine serves as the Label Expression, so if a host is set up as ‘ExampleNode’, using ‘ExampleNode’ as the expression limits execution to that slave.

Parallel Execution of Linux/Windows tests

With this configuration in place, a rudimentary pipeline may look something like the following, where the stage labelled ‘windows’ executes exclusively on Jenkins slaves carrying that label, and likewise for ‘linux’:

```groovy
pipeline {
    agent none
    stages {
        stage('Run Tests') {
            parallel {
                stage('Test On Windows') {
                    agent {
                        label "windows"
                    }
                    steps {
                        bat "run-tests.bat"
                    }
                    post {
                        always {
                            junit "**/TEST-*.xml"
                        }
                    }
                }
                stage('Test On Linux') {
                    agent {
                        label "linux"
                    }
                    steps {
                        // "./" is needed because the workspace is not on the PATH
                        sh "./run-tests.sh"
                    }
                    post {
                        always {
                            junit "**/TEST-*.xml"
                        }
                    }
                }
            }
        }
    }
}
```

This example also introduces another powerful concept: as of Declarative Pipeline 1.2, Jenkins supports parallel stages, where tasks such as the Windows and Linux test runs above execute concurrently. With this in mind, it is easy to imagine a scenario where every supported operating system is tested before a build makes it to QA, creating a degree of confidence in the current build and allowing QA to spend more time on meaningful edge cases rather than working around bugs that automation could have caught early.
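The pipeline leaves the contents of run-tests.sh to the reader. As a rough sketch of what its Linux side could contain, the helper below runs a list of test commands and writes a minimal JUnit-style report named TEST-<suite>.xml, the file pattern the junit step collects. The script name matches the pipeline, but ‘run_suite’, ‘xml_escape’, and the report layout are assumptions; most real test frameworks have their own options for emitting JUnit reports, which would normally be preferable.

```shell
#!/usr/bin/env bash
# Sketch of a hypothetical run-tests.sh helper: run each test command and
# write a minimal JUnit-style XML report (TEST-<suite>.xml) that the
# pipeline's junit "**/TEST-*.xml" step can collect.

# Escape the characters that are special inside XML attribute values.
# "&" is replaced first so the entities added later are not re-escaped.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' \
    -e 's/>/\&gt;/g' -e 's/"/\&quot;/g'
}

# run_suite SUITE_NAME OUTPUT_DIR CMD...
# Runs each CMD with bash -c, records pass/fail per command, writes
# OUTPUT_DIR/TEST-SUITE_NAME.xml, and returns non-zero if any command failed.
# SUITE_NAME is assumed to be a plain word (it is not escaped).
run_suite() {
  local suite="$1" outdir="$2"
  shift 2
  local cases="" total=0 failures=0 cmd name
  for cmd in "$@"; do
    total=$((total + 1))
    name="$(xml_escape "$cmd")"
    if bash -c "$cmd" >/dev/null 2>&1; then
      cases+="  <testcase classname=\"$suite\" name=\"$name\"/>"$'\n'
    else
      failures=$((failures + 1))
      cases+="  <testcase classname=\"$suite\" name=\"$name\"><failure message=\"non-zero exit status\"/></testcase>"$'\n'
    fi
  done
  mkdir -p "$outdir"
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n'
    printf '<testsuite name="%s" tests="%d" failures="%d">\n' "$suite" "$total" "$failures"
    printf '%s' "$cases"
    printf '</testsuite>\n'
  } > "$outdir/TEST-$suite.xml"
  # A non-zero status marks the stage as failed; the post { always { junit } }
  # block in the pipeline still publishes the report.
  [ "$failures" -eq 0 ]
}
```

Inside run-tests.sh this might be called as ‘run_suite linux test-reports "make test"’, where the directory and the ‘make test’ command are purely illustrative.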

At the time of this writing, Microsoft and Red Hat had recently announced a partnership to bring support for Windows containers. It may soon be possible to automate testing across a broad array of Windows containers, cleanly initialized per test iteration, inside a platform such as OpenShift.

Docker Containers for Build Slaves

In some environments it may be advantageous to use Docker containers as build slaves. This is possible via the remote API on the Docker host; instructions for enabling it can be found in the Docker documentation. Slave images have the following requirements: 1) an SSH daemon (sshd) is running, 2) an account exists that Jenkins can authenticate to, and 3) the dependencies required by the build process are installed. For a Mavenized Java project, this means git, Java, and Maven are available on the system. It is not necessary to install Jenkins itself on the slave.
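Requirement 3 is easy to overlook until a build fails halfway through. A minimal sketch of a check that could be run inside a candidate slave image follows; the default tool list (git, java, mvn) mirrors the Mavenized Java example above, the function names are hypothetical, and it assumes the image provides bash.

```shell
#!/usr/bin/env bash
# Sketch: verify that a build-slave image provides the tools a build needs.
# The default tool list (git, java, mvn) follows the Mavenized Java example;
# adjust it for other build types.

# check_build_deps [TOOL...] -> prints each missing tool, returns 1 if any are missing
check_build_deps() {
  local missing=0 tool
  if [ "$#" -eq 0 ]; then
    set -- git java mvn   # default list when no tools are given
  fi
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# sshd usually lives in /usr/sbin, which may not be on a non-root PATH,
# so look for it there explicitly as well.
check_sshd() {
  command -v sshd >/dev/null 2>&1 || [ -x /usr/sbin/sshd ]
}
```

One way to run it against an image without baking it in is to pass the function definition through ‘declare -f’, e.g. ‘docker run --rm IMAGE bash -c "$(declare -f check_build_deps); check_build_deps git java mvn"’, where IMAGE is a placeholder for your slave image.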

Once this is tested and working, select ‘Manage Jenkins’, then ‘Manage Plugins’ on the Jenkins master. Search for and install the Docker Plugin; once it is installed, select ‘Configure System’ from within the ‘Manage Jenkins’ window. Under the last configuration section, ‘Cloud’, parameters will be available for communicating with the Docker host, including a name, the Docker URL (or IP address), credentials, timeout values, and a maximum container cap.
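Before entering the Docker URL in the ‘Cloud’ section, it can help to confirm that the master can actually reach the Docker remote API. The Docker Engine API answers GET /_ping with ‘OK’; the sketch below turns a tcp:// Docker URL into that endpoint and queries it with curl. The host name is a placeholder, and the sketch assumes an unencrypted daemon on the conventional port 2375; a TLS-protected daemon (conventionally port 2376) would need client certificates passed to curl.

```shell
#!/usr/bin/env bash
# Sketch: check that a Docker host's remote API is reachable from the master.
# Assumption: the daemon listens on plain TCP (no TLS) at the given URL.

# docker_ping_url tcp://HOST:PORT -> prints http://HOST:PORT/_ping
docker_ping_url() {
  local url="${1%/}"
  url="${url#tcp://}"
  url="${url#http://}"
  printf 'http://%s/_ping\n' "$url"
}

# docker_api_reachable tcp://HOST:PORT -> succeeds if the API answers "OK"
docker_api_reachable() {
  local reply
  reply="$(curl -fsS --max-time 5 "$(docker_ping_url "$1")")" || return 1
  [ "$reply" = "OK" ]
}
```

For example, ‘docker_api_reachable tcp://docker.example.com:2375’. The Docker CLI offers a similar check with ‘docker -H tcp://docker.example.com:2375 version’.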

When complete, select “Add Docker Template”, and from the contextual options, fill in the “docker template” with the appropriate values for the environment. At a minimum, these will include the Docker Image name, instance capacity, remote system root, labels, Usage, and a launch method with associated credentials.

Job types that allow for the ‘Restrict where this project can be run’ option may now accept the label configured for the slave instance. This configuration will now instantiate a container, execute configured pipeline build steps, and remove the container once complete. This may open the door for more complex testing against an ephemeral host.

Additional Plugins (Pull request builder) — Automatic Code Check-in workflow

Yet another capability that can be configured with Jenkins is automatic building and testing of pull requests: when a pull request is opened against a branch, Jenkins builds the change, runs the tests on a target operating system, and reports the result back to GitHub. Jenkins achieves this via the GitHub Pull Request Builder plugin, along with a few other dependencies detailed below. Once in place, this workflow simplifies submitting working changes back to a target development branch. The result is an automated build, automated testing, and the option for one-click merging conditional on a successful exit code, all run inside an ephemeral Docker container created to test the build.

The plugin dependencies are listed below. Each can be installed by navigating to ‘Manage Jenkins’ > ‘Manage Plugins’, where they need to be searched for and installed individually:

GitHub Plugin

GitHub Authentication Plugin

GitHub Pull Request Builder

Post Build Script Plugin

Parameterized Trigger

Matrix Project Plugin
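As an alternative to searching for each plugin in the UI, the same plugins can be installed with the Jenkins CLI’s install-plugin command. The plugin IDs below (github, github-oauth, ghprb, postbuildscript, parameterized-trigger, matrix-project) are, to the best of our knowledge, the update-center short names of the plugins listed above; the master URL and the user:API-token pair are placeholders, and both should be verified against your own Jenkins before use.

```shell
#!/usr/bin/env bash
# Sketch: install the pull-request workflow plugins with the Jenkins CLI.
# Assumptions: jenkins-cli.jar has been downloaded from <master>/jnlpJars/,
# and the URL and user:api-token arguments are placeholders.

# Update-center short names, assumed to match the plugins listed above.
PR_PLUGINS=(github github-oauth ghprb postbuildscript parameterized-trigger matrix-project)

# plugin_install_cmd JENKINS_URL USER:TOKEN [--run]
# Without --run, only prints the command (dry run); with --run, executes it
# and restarts Jenkins after a successful install.
plugin_install_cmd() {
  local args=(java -jar jenkins-cli.jar -s "$1" -auth "$2" install-plugin "${PR_PLUGINS[@]}" -restart)
  if [ "${3:-}" = "--run" ]; then
    "${args[@]}"
  else
    echo "${args[*]}"
  fi
}
```

Printing the command first makes it easy to review before running ‘plugin_install_cmd http://jenkins.example.com:8080 admin:TOKEN --run’.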

Once the dependencies have been met, the example leverages two configured Jenkins jobs:

JobName: Builds on any pull request created

JobName-Base: Triggered by a pull request, builds the base branch when the Pull Request build succeeds

For JobName:

1) Configure the name of the job (the name is arbitrary)

2) Specify the GitHub URL of the repository containing the source code for the build

3) Choose ‘Git’ for SCM, as we are using Git in this example

In this instance, Refspec is configured as follows:

+refs/heads/*:refs/remotes/origin/* +refs/pull/*:refs/remotes/origin/pr/*

More information about refspecs can be found in the Refspec section of the Git Internals chapter of the Pro Git book.

4) Set ‘Branch Specifier’ to $

5) Add a valid SSH credential via the Credentials menu to the build/test environment, if not local to the Jenkins instance.
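The refspec in step 3 has two parts: ‘+refs/heads/*:refs/remotes/origin/*’ maps ordinary branches to remote-tracking branches, and ‘+refs/pull/*:refs/remotes/origin/pr/*’ maps the refs GitHub publishes for each pull request (refs/pull/<number>/head, plus refs/pull/<number>/merge for the test merge) to origin/pr/<number>/.... The leading ‘+’ allows non-fast-forward updates, which matters because pull request refs are rewritten as the pull request changes. The sketch below performs the same fetch by hand so that, for example, origin/pr/50/merge can be inspected locally; the remote name is an assumption that defaults to origin.

```shell
#!/usr/bin/env bash
# Sketch: fetch branches and GitHub pull request refs the way the job's
# refspec does. Run inside an existing clone.

# fetch_pr_refs [REMOTE] -> fetches heads and pull refs from REMOTE (default: origin)
fetch_pr_refs() {
  local remote="${1:-origin}"
  git fetch --quiet "$remote" \
    "+refs/heads/*:refs/remotes/$remote/*" \
    "+refs/pull/*:refs/remotes/$remote/pr/*"
}
```

After ‘fetch_pr_refs’, a pull request can be checked out with e.g. ‘git checkout origin/pr/50/merge’, which is the same ref format used for the sha1 parameter of the second job.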

Under ‘Build Triggers’, select ‘GitHub Pull Request Builder’ and configure the following:

Commit Status Context: The name you’d like to display in SCM for pull requests when running the job.

List of organizations. Members of the organization are automatically whitelisted.

Select ‘Allow members of whitelisted organizations as admins’

Select ‘Build every pull request automatically without asking (Dangerous!)’

Build Execute Shell:

```bash
#!/bin/bash +x
set -e

# Remove unnecessary files
echo -e "\033[34mRemoving unnecessary files...\033[0m"
rm -f log/*.log &> /dev/null || true
rm -rf public/uploads/* &> /dev/null || true

# Build Project
echo -e "\033[34mBuilding Project...\033[0m"
docker-compose --project-name=$ build

# Prepare test database
COMMAND="bundle exec rake db:drop db:create db:migrate"
echo -e "\033[34mRunning: $COMMAND\033[0m"
docker-compose --project-name=$ run -e RAILS_ENV=test web $COMMAND

# Run tests (unbuffer, from the expect package, keeps the output streaming)
COMMAND="bundle exec rspec spec"
echo -e "\033[34mRunning: $COMMAND\033[0m"
unbuffer docker-compose --project-name=$ run web $COMMAND

# Run rubocop lint
COMMAND="bundle exec rubocop app spec -R --format simple"
echo -e "\033[34mRunning: $COMMAND\033[0m"
unbuffer docker-compose --project-name=$ run -e RUBYOPT="-Ku" web $COMMAND
```

The script can be summarized as:

Clean unneeded files

Build the Docker image

Prepare the test database

Run tests, lint checks, or anything else desired.

Ensure ‘set -e’ is specified at the beginning of the script; it instructs the script to exit as soon as any command fails. If it is omitted, the script’s exit status is that of its last command, so the build only fails if the last step fails.
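The effect of ‘set -e’ is easy to demonstrate. The sketch below starts a fresh bash process that runs a failing step followed by a later step, once without and once with ‘set -e’; ‘run_steps’ is a hypothetical name used only for this illustration.

```shell
#!/usr/bin/env bash
# Sketch: show why the build script needs `set -e`. Each call starts a
# fresh bash process that runs a failing step followed by a later step.

# run_steps MODE -> MODE "errexit" enables set -e; anything else leaves it off
run_steps() {
  local prelude=""
  if [ "$1" = "errexit" ]; then
    prelude="set -e;"
  fi
  bash -c "${prelude} false; echo 'later step ran'"
}
```

‘run_steps plain’ prints "later step ran" and exits with status 0, so Jenkins would report success even though a step failed; ‘run_steps errexit’ prints nothing and exits with status 1. This is also why the ‘rm’ lines in the build script end in ‘|| true’: there, a missing file should not stop the build.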

6) Add a ‘Set Status “pending” on GitHub commit’ build step

7) Add a ‘Post-build Actions Execute Shell’ step to remove leftover containers and dangling images:

```bash
#!/bin/bash +x
# Each command ends in "|| true" so a cleanup failure never fails the build.
# Stop and remove the containers docker-compose created for this build
docker-compose --project-name=$ stop &> /dev/null || true
docker-compose --project-name=$ rm --force &> /dev/null || true
# Clean up exited containers and dangling images left on the host
docker stop $(docker ps -a -q -f status=exited) &> /dev/null || true
docker rm -v $(docker ps -a -q -f status=exited) &> /dev/null || true
docker rmi $(docker images --filter 'dangling=true' -q --no-trunc) &> /dev/null || true
```

8) Add a ‘Trigger parameterized build on other projects’ build step, set to trigger when the build is stable.

For JobName-Base:

This job is very similar to the one above: repeat steps 1–8 for it, noting the differences highlighted below.

Specify the job name

Specify a repository URL for the project as above

Check ‘This project is parameterized’ and supply Name: ‘sha1’, Default Value: ‘origin/development’, and a description of your choice, e.g. ‘Branch name (origin/development) or pull request (origin/pr/50/merge)’

Select Git for SCM

DO NOT check GitHub Pull Request Builder

Copy the same Build Execute Shell from the first job

Copy the Post Build Action from the first job

DO NOT specify Trigger Parameterized build on other projects

Now, every time someone opens a pull request, JobName will be triggered unconditionally, and JobName-Base will be triggered only if JobName succeeds. The target branch here is ‘origin/development’, but this can be configured according to need.
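The parameterized trigger passes sha1 to JobName-Base from inside Jenkins. To exercise that job on its own, for example against a specific pull request merge ref, Jenkins’ remote access API also accepts parameterized builds via a POST to <master>/job/<name>/buildWithParameters. In the sketch below the master URL, user, and API token are placeholders; the sha1 value is not URL-encoded, and depending on the Jenkins version and security settings a CSRF crumb may also be required.

```shell
#!/usr/bin/env bash
# Sketch: queue a JobName-Base build for a given branch or PR ref via the
# Jenkins remote access API. URL, user and token are placeholders.

# base_build_url MASTER_URL JOB_NAME SHA1 -> prints the buildWithParameters URL
base_build_url() {
  local master="${1%/}" job="$2" sha1="$3"
  printf '%s/job/%s/buildWithParameters?sha1=%s\n' "$master" "$job" "$sha1"
}

# trigger_base_build MASTER_URL JOB_NAME SHA1 USER:API_TOKEN
trigger_base_build() {
  curl -fsS -X POST -u "$4" "$(base_build_url "$1" "$2" "$3")"
}
```

For example, ‘trigger_base_build http://jenkins.example.com:8080 JobName-Base origin/pr/50/merge admin:TOKEN’ would queue the base job against the merge ref of pull request 50.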

Docker Compose Preparation:

The following Dockerfile should be checked into SCM at the root of the repository; it is used when ‘docker-compose build’ is invoked. It is intended for a Ruby on Rails application, but it can be ported to suit any other application, since the architecture of the deployment remains the same.

```dockerfile
FROM ruby:2.2.2
RUN apt-get update -qq && apt-get install -y build-essential libpq-dev nodejs
# Install RMagick
# RUN apt-get install -y libmagickwand-dev imagemagick
# Install Nokogiri
# RUN apt-get install -y zlib1g-dev
RUN mkdir /myapp
# Copy only the Gemfiles first so the bundle install layer stays cached
# until the dependencies change
WORKDIR /tmp
COPY Gemfile Gemfile
COPY Gemfile.lock Gemfile.lock
RUN bundle install -j 4
ADD . /myapp
WORKDIR /myapp
```

The following docker-compose.yml file prepares the services necessary to run the app (e.g. the database and web server). Again, this file is aimed at RoR, but it can be modified as needed to support another stack.

```yaml
db:
  image: postgres
  ports:
    - "5432"
redis:
  image: redis
  ports:
    - "6379"
web:
  build: .
  command: bundle exec rails s -p 3000 -b '0.0.0.0'
  volumes:
    - .:/myapp
  ports:
    - "3000:3000"
  environment:
    - DB_USERNAME=postgres
    - DB_PASSWORD=
  links:
    - db
    # - redis
# sidekiq:
#   build: .
#   command: bundle exec sidekiq
#   links:
#     - db
#     - redis
#   volumes:
#     - .:/usr/src/service
```

For local development, some modifications may be required to support a local Docker instance. The following is a template for the database.yml file, which in this example lives at config/database.yml relative to the application root. Each connection setting is read from an environment variable, falling back to the default shown when that variable is not set.

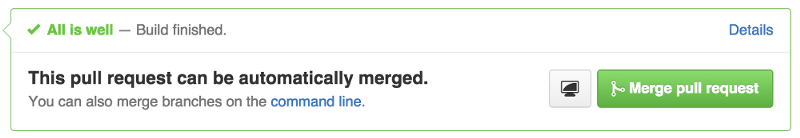

development: &defaultadapter: postgresqlencoding: unicodedatabase: your-project_developmentpool: 5username: <%= ENV.fetch(‘DB_USERNAME’, ‘your-project’) %>password: <%= ENV.fetch(‘DB_PASSWORD’, ‘your-project’) %>host: <%= ENV.fetch(‘DB_1_PORT_5432_TCP_ADDR’, ‘localhost’) %>port: <%= ENV.fetch(‘DB_1_PORT_5432_TCP_PORT’, ‘5432’) %>test: &test<<: *defaultdatabase: your-project_testWith all of these settings correctly implemented, every successful pull request will display something display the following directly in the repository:

Any pull request that does not satisfy the test suite in the build process will show a failed build status and, when the target branch requires passing status checks, will not be available for merging. Running containers locally can be accomplished by invoking ‘docker-compose run web’.
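When running locally, the database.yml template takes its connection settings from environment variables and falls back to its defaults for any that are unset. Ruby’s ENV.fetch(key, default) returns the default only when the key is absent, not when it is set to an empty string, which matches the shell’s ${VAR-default} form (without the colon). The sketch below uses that to preview which values the template would resolve to in the current shell; ‘effective_db_config’ is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: preview the values config/database.yml would pick up in this shell.
# ${VAR-default} (no colon) mirrors Ruby's ENV.fetch(key, default): the
# default applies only when VAR is unset, not when it is set but empty.

effective_db_config() {
  echo "username=${DB_USERNAME-your-project}"
  echo "password=${DB_PASSWORD-your-project}"
  echo "host=${DB_1_PORT_5432_TCP_ADDR-localhost}"
  echo "port=${DB_1_PORT_5432_TCP_PORT-5432}"
}
```

For example, with DB_USERNAME=postgres exported and the other variables unset, it reports username=postgres, password=your-project, host=localhost, and port=5432.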

External Jenkins Master to OpenShift deployment

Many organizations already have existing Jenkins infrastructure and need to adapt it to work with containerized environments, such as Red Hat’s OpenShift Container Platform. Fortunately, it is possible to integrate an external Jenkins master instance with containerized slaves. This example looks at the configuration required to do just that, enabling Jenkins to dynamically allocate resources and pods as needed.

Templates to enable this behavior can be found on GitHub, and cloned with:

```shell
git clone https://github.com/sabre1041/ose-jenkins-cluster
```

Use the oc client to create a new OpenShift Container Platform (OCP) project to house the required resources:

```shell
oc new-project jenkins
```

From within the cloned directory, add the templates to the project with:

```shell
oc create -f support/jenkins-cluster-persistent-template.json,support/jenkins-cluster-ephemeral-template.json,support/jenkins-external-services-template.json
```

We have looked at a few examples of what is possible using Jenkins automation as part of the CD/CI and DevOps process. This is by no means a comprehensive list of Jenkins capabilities, but rather a quick primer on a few of the possibilities. With more Jenkins plugins being actively developed and published, Jenkins is likely to remain a relevant and dynamic automation platform well into the future.